Announcing General Availability of Azure AI Content Safety

Microsoft has announced the general availability of Azure AI Content Safety. The announcement was published on the Microsoft Azure AI Blog.

This new service helps you detect and filter harmful user-generated and AI-generated content in your applications and services. Content Safety includes text and image detection to find content that is offensive, risky, or undesirable, such as profanity, adult content, gore, violence, hate speech, and more. You can also use the interactive Azure AI Content Safety Studio to view, explore, and try out sample code for detecting harmful content across different modalities.

Here is a deeper dive into key features:

- Multilingual Proficiency: In today's globalized digital ecosystem, content is produced in countless languages. Azure AI Content Safety is equipped to handle and moderate content across multiple languages, creating a universally safe environment that respects regional nuances and cultural sensitivities.

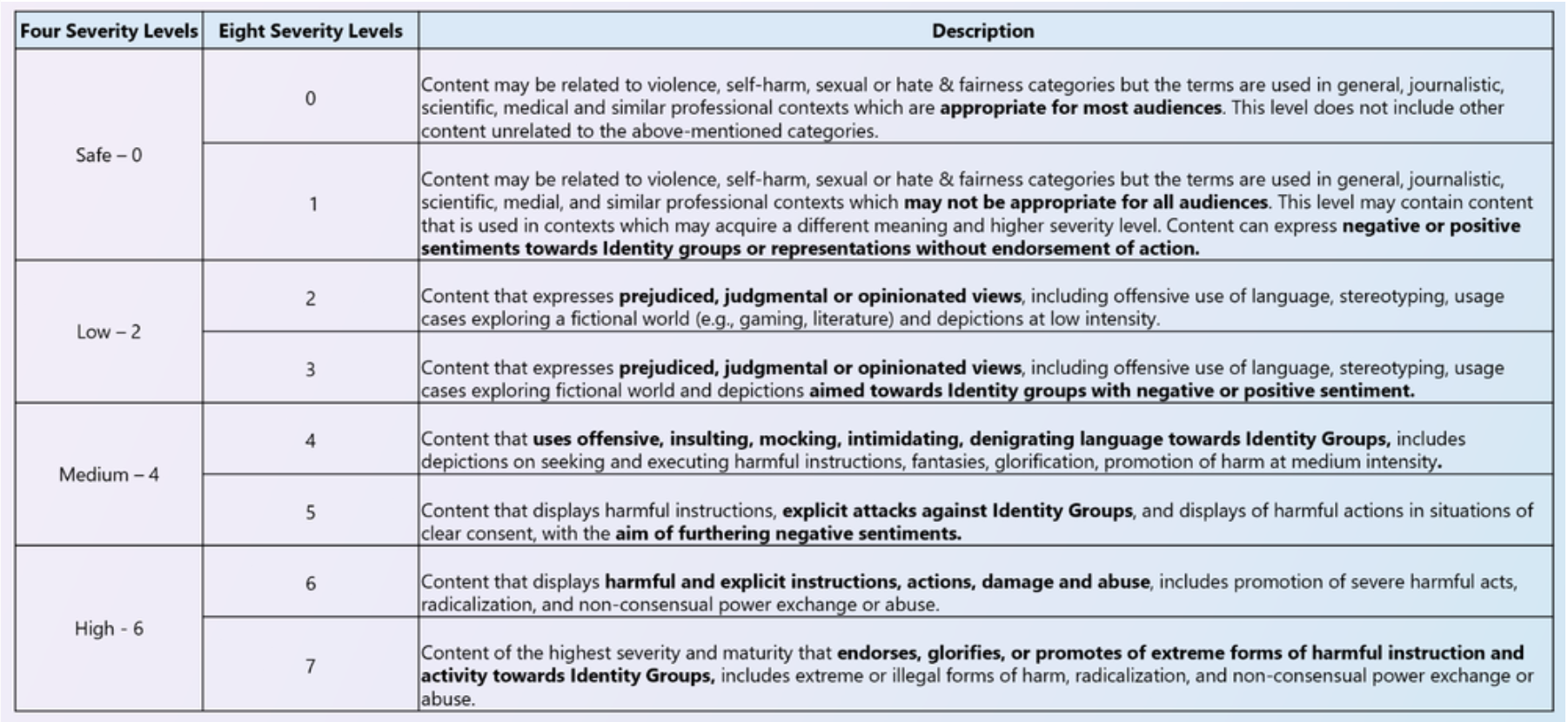

- Severity Indication: Azure AI Content Safety reports a 'Severity' metric that rates specific content on a scale from 0 to 7. The metric can be read at two granularities: four levels for quick testing and eight levels for advanced analysis. This flexibility lets businesses quickly assess the level of threat posed by a piece of content, develop appropriate strategies to address it, and take proactive measures to protect the safety and integrity of their digital environment.

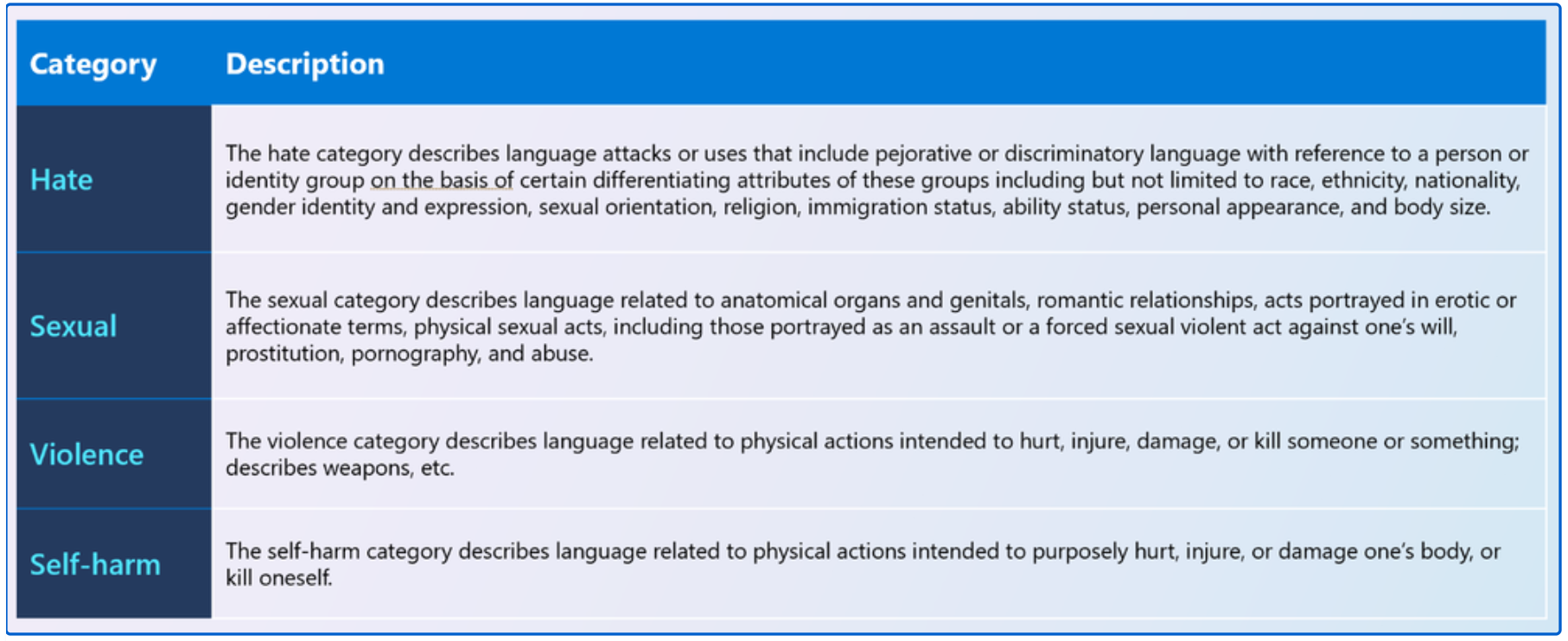
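To make the two granularities concrete, here is a minimal Python sketch of how an eight-level severity could be collapsed to four levels. It assumes the four-level view reports 0, 2, 4, and 6 by merging each adjacent pair of eight-level values; that mapping is not stated in this post, so check it against the service documentation. The function name `to_four_level` is hypothetical and is not part of any Azure SDK.

```python
def to_four_level(severity: int) -> int:
    """Collapse an eight-level severity (0-7) to an assumed four-level scale (0, 2, 4, 6)."""
    if not 0 <= severity <= 7:
        raise ValueError(f"severity must be in 0..7, got {severity}")
    # Assumption: each adjacent pair (0,1), (2,3), (4,5), (6,7) maps to its even member.
    return severity - (severity % 2)


if __name__ == "__main__":
    for s in range(8):
        print(s, "->", to_four_level(s))
```

With this mapping, a quick test only has to reason about four buckets, while an advanced analysis can keep the full 0 to 7 value.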

- Multi-Category Filtering: Azure AI Content Safety can identify and categorize harmful content across several critical domains:

- Hate: Content that promotes discrimination, prejudice, or animosity towards individuals or groups based on race, religion, gender, or other identity-defining characteristics;

- Violence: Content displaying or advocating for physical harm, threats, or violent actions against oneself or others;

- Self-Harm: Material that depicts, glorifies, or suggests acts of self-injury or suicide;

- Sexual: Explicit or suggestive content, including, but not limited to, nudity and intimate media.

- Text and image detection: In addition to text, digital communication also relies heavily on visuals. Azure AI Content Safety offers image features that use advanced AI algorithms to scan, analyze, and moderate visual content, ensuring comprehensive, 360-degree safety coverage.
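The categories and the severity metric described above can be combined into a simple moderation decision. The following is a minimal Python sketch, not the service's API: the input dictionary of per-category severities, the `decide` helper, the `Decision` type, and the threshold values are all illustrative assumptions. The category keys reuse the labels from this post and may differ from the identifiers the service actually returns.

```python
from dataclasses import dataclass

# Category labels come from the list above; the threshold values are
# illustrative assumptions, not recommendations from Microsoft.
DEFAULT_THRESHOLDS = {"Hate": 4, "Violence": 4, "Self-Harm": 2, "Sexual": 4}


@dataclass
class Decision:
    allowed: bool
    flagged: dict  # category -> severity that met or exceeded its threshold


def decide(severities: dict, thresholds: dict = DEFAULT_THRESHOLDS) -> Decision:
    """Flag every category whose severity (0-7) reaches its threshold.

    Categories that have no configured threshold are ignored.
    """
    flagged = {
        category: severity
        for category, severity in severities.items()
        if category in thresholds and severity >= thresholds[category]
    }
    return Decision(allowed=not flagged, flagged=flagged)


if __name__ == "__main__":
    # Hypothetical per-category severities for one piece of content.
    sample = {"Hate": 0, "Violence": 5, "Self-Harm": 0, "Sexual": 1}
    result = decide(sample)
    print(result.allowed, result.flagged)  # False {'Violence': 5}
```

Keeping a separate threshold per category lets an application be stricter about some kinds of content (here, Self-Harm) than others without changing the decision logic.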

Learn more

Azure AI Content Safety is a powerful tool that enables content flagging for industries such as Media & Entertainment and others that require Safety & Security and Digital Content Management. Microsoft eagerly anticipates seeing your innovative implementations!

- ACOM page: https://aka.ms/acs-acom

- Documentation: https://aka.ms/acs-doc

- Studio: https://aka.ms/acsstudio

- API reference: https://aka.ms/acs-api